prepareTargetTable

You are looking at an older version of the documentation. The latest version is found here.

This procedure creates a target table if it does not exist and searches for the maximum of the incremental field.

Parameters

| Parameter | Description |

|---|---|

sourceObject | Fully-qualified name of the source. Can be an existing table or a stored procedure returning data. Can also be an arbitrary table specification in the form of string returning data: $column1|dataType1,column2|dataType3,column3[|string]; mandatory |

isSourceATable | TRUE or NULL if the source is a table, FALSE if it is a procedure |

additionalColumns | Columns to add to the source |

excludedColumns | Columns to exclude from the source |

target_table | Fully qualified name of the target table |

cleanupMethod | Cleanup method: DELETE or DROP. Any other value will throw an error. NULL or Whitespace will not perform any cleanup |

preview | Preview only, without creating a table |

incrementalField | Optional way to search for the maximum value in the target table |

incrementalFilter | Optional target table filter to search for the maximum incremental value, e.g. due to partitioning |

incrementalMinimum | If TRUE, requests the minimum value instead of the maximum one from the target table |

incrementalAlternateField | Field to return based on the extreme value of another incremental field (e.g., to return the ID of a record with maximum/minimum timestamp, specify ID here) |

Attributes

Attribute | Type | Description |

|---|---|---|

| string | Quoted fully-qualified name of the target table |

| string | Target data source name |

| string | Simple name of the target table |

| string | Template of the target data source |

| boolean | Shows whether data can be written (i.e. target table specified and not preview) |

| boolean | Shows whether the table was created |

| object | Maximum incremental value in the target table |

| clob | Comma-separated list of source columns |

| clob | Comma-separated list of target columns |

The procedure implements the initial target table setup workflow, applicable to most connectors. It generates the physical table with the structure of an existing object, which may be a table, a view, or a stored procedure returning data. As with most procedures in connectors, it returns data in the format applicable to a certain API endpoint or method; these objects can generally be used as source objects for this procedure. A fully-qualified object name is submitted to the sourceObject parameter. An additional parameter, isSourceATable, is used to determine the object type: table, view, or procedure, and eventually query SYS.Columns or SYS.ProcedureParams for the metadata internally.

Here's an example of such a procedure:

/* Procedure */

CREATE VIRTUAL PROCEDURE views.p1()

RETURNS (

x integer,

y string

)

AS

BEGIN

SELECT 1, 'One';

END;;

CALL UTILS.prepareTargetTable(

sourceObject => 'views.p1',

isSourceATable => FALSE,

target_table => 'dwh.TableBasedOnViewsP1',

preview => FALSE,

cleanupMethod => 'DELETE'

);;

/* View or table */

CREATE VIEW views.v1 AS

SELECT * FROM (CALL views.p1()) x;;

CALL UTILS.prepareTargetTable(

sourceObject => 'views.v1',

isSourceATable => TRUE,

target_table => 'dwh.TableBasedOnViewsV1',

preview => FALSE,

cleanupMethod => 'DROP'

);;If you want to specify additional columns, you can do so by providing a value to the additionalColumns parameter. This parameter allows specifying a column at the beginning, end, or both ends of the resultset, and the format depends on the exact location:

- Beginning: provide a comma-separated list;

- End: start this list with a comma;

- Both: specify a comma-separated list, but separate the chunks from the beginning and the end with a double comma.

The default data type is string, which can be modified by submitting the datatype after the column name separated by a vertical bar.

In some instances, procedures return more columns than should be in the target table. One example is flattening the data when we have columns corresponding to the main object and columns corresponding to the child object listed in the procedure output. In this case, use the excludedColumns parameter and separate the columns which should not be created in the target table with a comma.

CREATE VIRTUAL PROCEDURE views.p2()

RETURNS (

x integer,

y string,

z xml,

z_inner1 string,

z_inner2 string

)

AS

BEGIN

SELECT 1, 'One', XmlParse(Document '<root />'), 'inner1', 'inner2';

END;;

CALL UTILS.prepareTargetTable(

sourceObject => 'views.p2',

isSourceATable => FALSE,

additionalColumns => 'id|integer,author,,created_at|timestamp'

excludedColumns => 'z,z_inner1,z_inner2',

target_table => 'dwh.TableBasedOnViewsP2_withExcluded',

preview => FALSE,

cleanupMethod => 'DELETE'

);;The target_table and preview parameters are used to control the physical write. If target_table is set to NULL or preview is set to TRUE, the table will not be created, and this procedure will virtually be skipped. These parameters also affect the value of the returned writeData field, which will return TRUE, if previewis disabled and target_table is set, and FALSE otherwise. If you read writeData in the calling connector procedure, you no longer need to do checks with target_table and previewvariables, as these are encapsulated within the current procedure. An additional returned field tableCreated shows whether the target table was created during this very call to the procedure. It returns FALSE in all other cases.

This field comes in handy, for example, in the case of downloading a complex object with multiple nesting. In this case, the procedure will return TRUE on the first run, and the connector will create additional tables for the nested objects. If you do not need those additional tables, they may be dropped. During connector operation, there is no need to recreate those additional tables on subsequent runs. If they don't exist, and the target table was not created in this very run, the missing tables will be skipped from the population.

Additional output parameters for this workflow are datasourceName and tableShortName. They contain plain non-quoted names of the target data source and table. The table may be appended by dependent object suffix for convenience.

INSERT INTO #__LOCAL__temp_table

SELECT

quoted_target_table,

datasourceName,

tableShortName,

writeData,

tableCreated,

Cast(maxValue as timestamp) as maxValue,

source_columns,

target_columns

FROM (

CALL UTILS.prepareTargetTable(

sourceObject => '${vsname}.ProcedureWithComplexOutput',

isSourceATable => FALSE,

target_table => target_table,

preview => preview,

incrementalField => 'retrievedTimestamp',

excludedColumns => 'XmlField1,ClobField1,XmlField2'

)

) x;

DECLARE boolean writeData = SELECT writeData FROM #__LOCAL__temp_table;

DECLARE boolean tableCreated = SELECT tableCreated FROM #__LOCAL__temp_table;

DECLARE timestamp maxRetrievedTimestamp = SELECT maxValue FROM #__LOCAL__temp_table;

...

DECLARE string internal_target_table_Additional = '"' || datasourceName || '"."' || tableShortName || '_XmlField1"';

...

IF (

Not Exists (

SELECT * FROM SYS.Tables t

WHERE

LCase (t.VDBName) = LCase (Variables.vdb_name)

AND LCASE('"' || t.SchemaName || '"."' || t.name || '"') = LCASE(internal_target_table_Additional)

)

)

BEGIN

IF (tableCreated)

BEGIN

EXECUTE IMMEDIATE '

CREATE TABLE ' || internal_target_table_Additional || ' (

KeyID integer,

NestedField1 integer,

NestedField2 string

);' Without Return;

write_Additional = TRUE;

END

END

ELSE

write_Additional = TRUE;

...

WHILE (pagination)

BEGIN

...

IF (write_Additional)

BEGIN

INSERT INTO #__LOCAL__temp_table_Additional

SELECT m.KeyID, l.*

FROM #__LOCAL__temp_table m

CROSS JOIN XmlTable (

'/root/XmlField1'

PASSING Nvl(m.XmlField1, XmlElement(root))

COLUMNS

NestedField1 integer,

NestedField2 string

) l;

EXECUTE IMMEDIATE '

INSERT INTO ' || internal_target_table_Additional || '

SELECT *

FROM #__LOCAL__temp_table_Additional;' WITHOUT RETURN;

END

...

ENDThe returned template field contains the name of the template of the target data source. This is important if the data source is BigQuery. There may be additional logic involved, including no DELETE statements in such cases.

The incrementalField and incrementalFilter parameters are used for incremental downloads. If incrementalField is set, the procedure returns the maximum value of this field in the physical table. The value is returned in the maxValue field as object. This can be integer, long, date, or timestamp - any data type supporting the max function. Please use a case to the respective type within the connector. If the target table contains partitioned data, you may specify an additional filter on this data using the incrementalFilter parameter which will be appended to the aggregate query.

Here are some example calls and outputs:

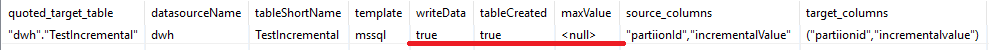

Example 1

CREATE VIRTUAL PROCEDURE views.p3()

RETURNS (

partiionId integer,

incrementalValue integer

)

AS

BEGIN

SELECT 1, 1;

END;;

CALL UTILS.prepareTargetTable(

sourceObject => 'views.p3',

isSourceATable => FALSE,

target_table => 'dwh.TestIncremental',

preview => FALSE,

incrementalField => 'incrementalValue',

cleanupMethod => 'DROP'

);;

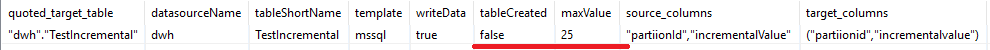

Example 2

INSERT INTO dwh.TestIncremental Values (1, 15);;

INSERT INTO dwh.TestIncremental Values (2, 25);;

CALL UTILS.prepareTargetTable(

sourceObject => 'views.p3',

isSourceATable => FALSE,

target_table => 'dwh.TestIncremental',

preview => FALSE,

incrementalField => 'incrementalValue',

cleanupMethod => 'DELETE'

);;

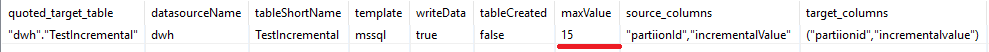

Example 3

CALL UTILS.prepareTargetTable(

sourceObject => 'views.p3',

isSourceATable => FALSE,

target_table => 'dwh.TestIncremental',

preview => FALSE,

incrementalField => 'incrementalValue',

incrementalFilter => 'WHERE partiionId = ' || 1,

cleanupMethod => 'DELETE'

);;

You can also specify additional parameters incrementalMinimum and incrementalAlternateField. If incrementalMinimum is set to TRUE, the minimum value will be searched in the target table rather than the maximum. If you specify the alternate field name within incrementalAlternateField, its value will be returned instead of the actual maximum value. The field value for the alternate field will be taken from the row with the maximum/minimum value of incrementalField. A sample scenario is returning the id of the record with the maximum timestamp.

Here is an example call to UTILS.getTableIntersection within this procedure. The output of this call is returned as source_columns and target_columns. The returned values may be consumed to be further used in dynamic SQL statements to list the column names for adaptation of the source and target metadata:

EXECUTE IMMEDIATE'

INSERT INTO ' || internal_target_table || target_columns || '

SELECT ' || source_columns || '

FROM #__LOCAL__temp_table;' WITHOUT RETURN;sourceObject updated with an arbitrary table specification available since v.2.4.9